Artificial Intimacy

Artificial Intimacy (2022)

Interactive Art

Demo at NordiCHI 10.10.2022

Harvard Art Museum 05.17.22 – 07.02.22

Journal for Digital Art History Spring 2023

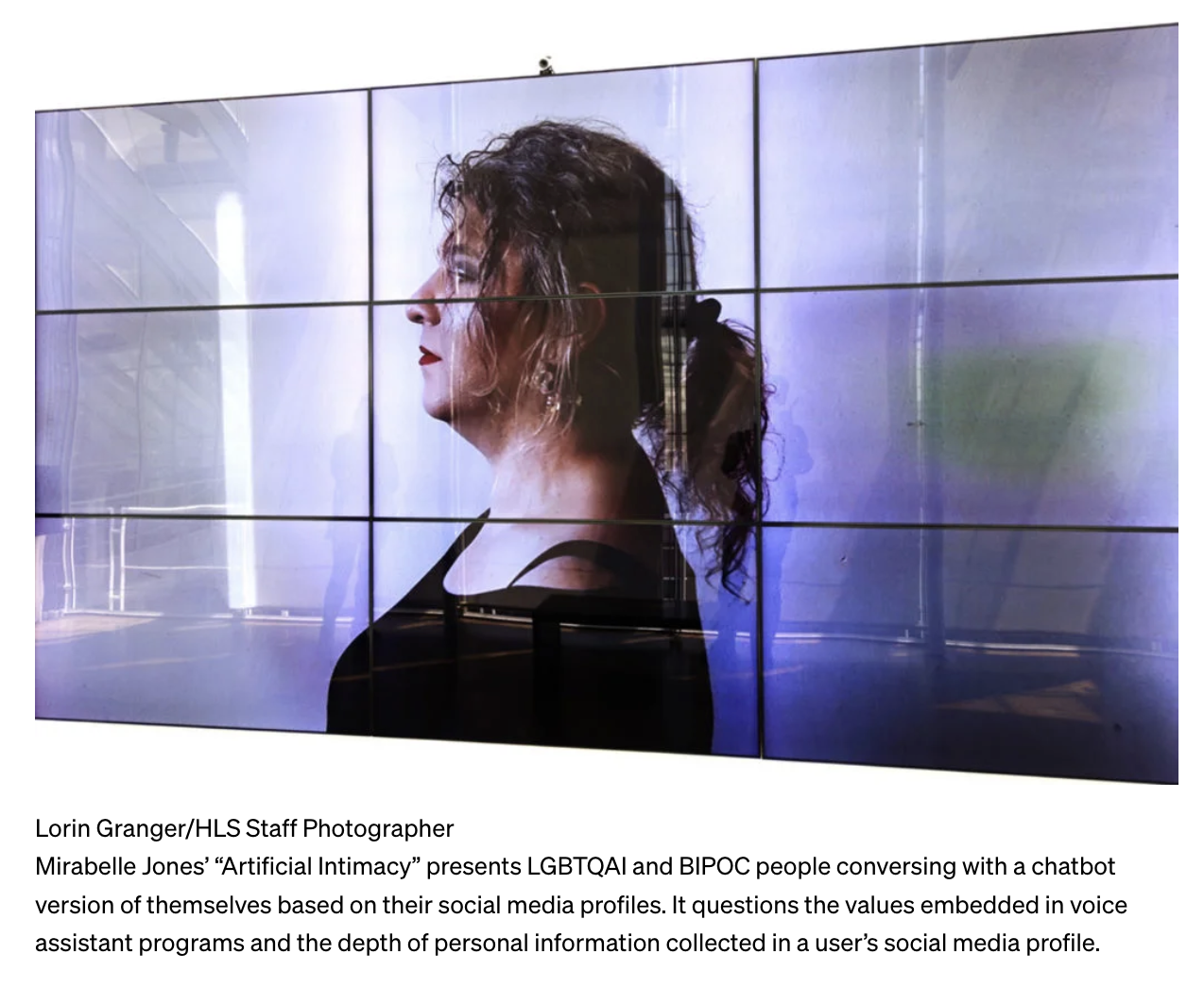

What does it take to render the self digital? Artificial Intimacy explores what digital personal assistants and the large language models that power them would be like if they were personalized to match our own personalities and values.

Chatbots are an ever-increasing presence in our lives. With this increase comes concern about the values and identities these chatbots do and do not represent. Journalists and researchers have brought worldwide attention to the fact that chatbots can exhibit racist or sexist behaviors because of their training data, or a lack thereof. In 2016, Microsoft’s chatbot Tay began making racist comments after only a day of interacting with people on Twitter. In a TEDx talk by chatbot expert Sophie Hundertmark, the chatbot Einstein assumes that an example child, one who is “successful at learning,” is male. Chatbots are known for defaulting to female voices and for often responding to abusive or sexist comments favorably or neutrally, which reinforces sexist opinions. At the same time, chatbots often fail to understand feminine voices as accurately as masculine voices because they are undertrained on feminine voices. If our goal is to create chatbots that are increasingly human-like, how can we ensure that chatbot technologies bear personalities, traits, values, and identities that represent the rich diversity of human beings, rather than replicating stereotypes or under-representing communities?

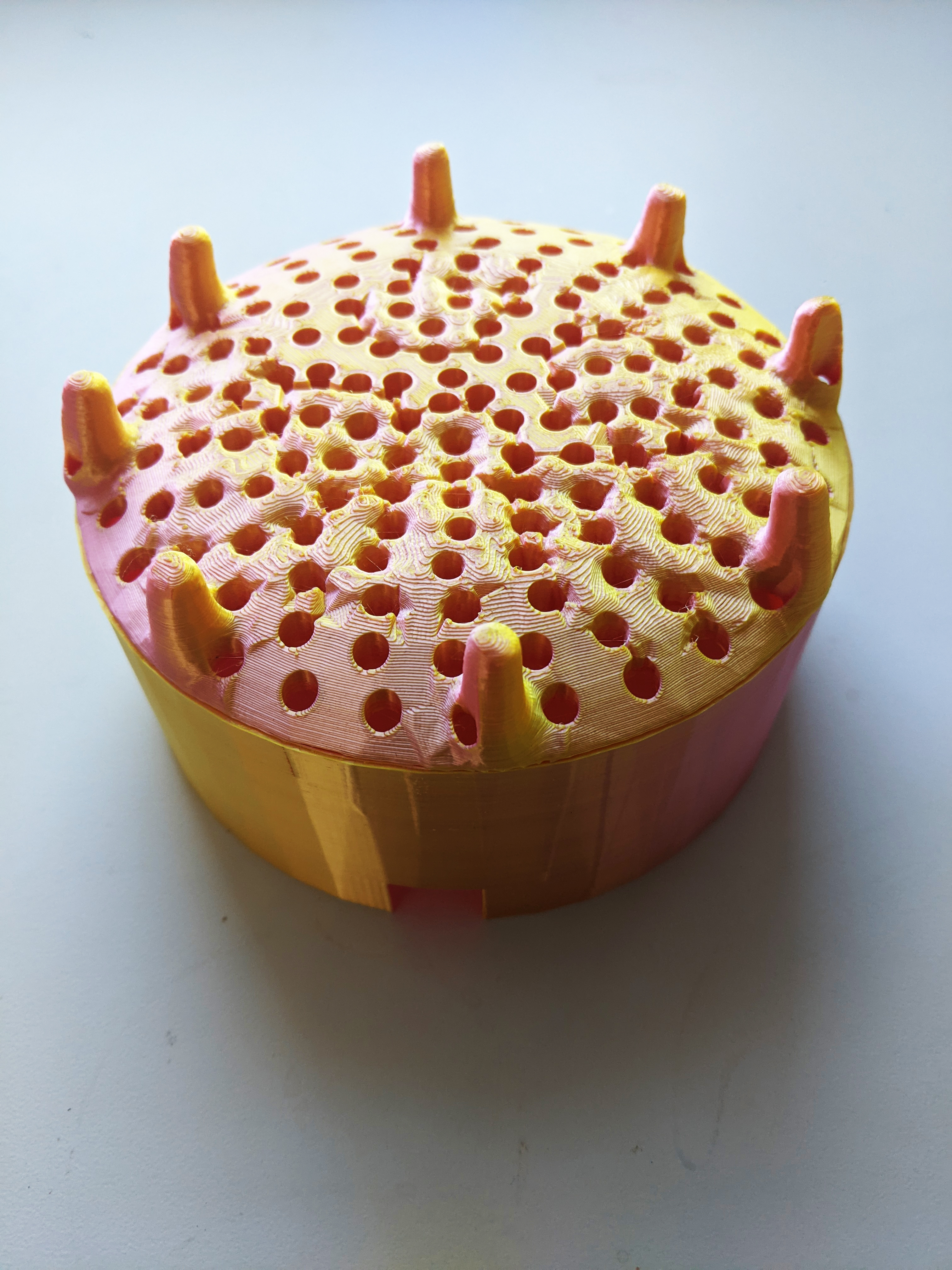

Artificial Intimacy is a project that considers what happens when you produce non-normative chatbots based on diverse identities. This interactive installation has three parts: a video installation, two graphic wall hangings, and an interactive chatbot sculpture. The video installation consists of two video screens that show two artists conversing with chatbot versions of themselves: Leslie Foster, a queer black bisexual artist whose works concern race, indigenous concepts, gender, and identity; and Gorjeoux Moon, a trans non-binary femme poet whose works concern trauma, addiction, sex, and gender. The chatbots were consensually trained on a dataset based on Foster’s and Moon’s social media profiles. The wall hangings, the second part of the installation, are made of a semi-translucent mesh in a gradient of mauve and gold and depict silhouettes of Foster and Moon alongside quotes from the video. The third part of the installation is a 3D-printed interactive chatbot sculpture that lets you talk to either Foster’s or Moon’s chatbot in a conversation of your choosing.

The three parts of the installation combine to help the viewer explore two questions: What happens when LGBTQAI and BIPOC individuals become the basis for chatbots? What non-normative values do the chatbots represent, and how are these incorporated? Through two individuals, the project interrogates gendered and racialized alternatives to normative voice assistant programs while asking what values are incorporated into voice assistant programs and how those values manifest. It also asks whether we can create digital copies of ourselves from our data alone, which leads to further questions: How much data, and of what type, is necessary to make a realistic replica? Do we prefer these kinds of models to normative chatbot systems? If the data is of us, but not us, what exactly separates us from our data?

Previous Exhibitions:

NordiCHI 2022

Harvard Art Museum 2022

Upcoming Exhibitions:

DAHJ June 2023